Power Considerations for Multi-GPU Builds

Written by:

Dave Ziegler

President at Ziegler Technical Solutions LLC

Welcome to my DIY AI series, where we explore considerations and best practices for building small-scale home, educational, and small office AI systems. In this post, we'll cover power considerations for building small AI workstations and servers for residential and small office lab environments.

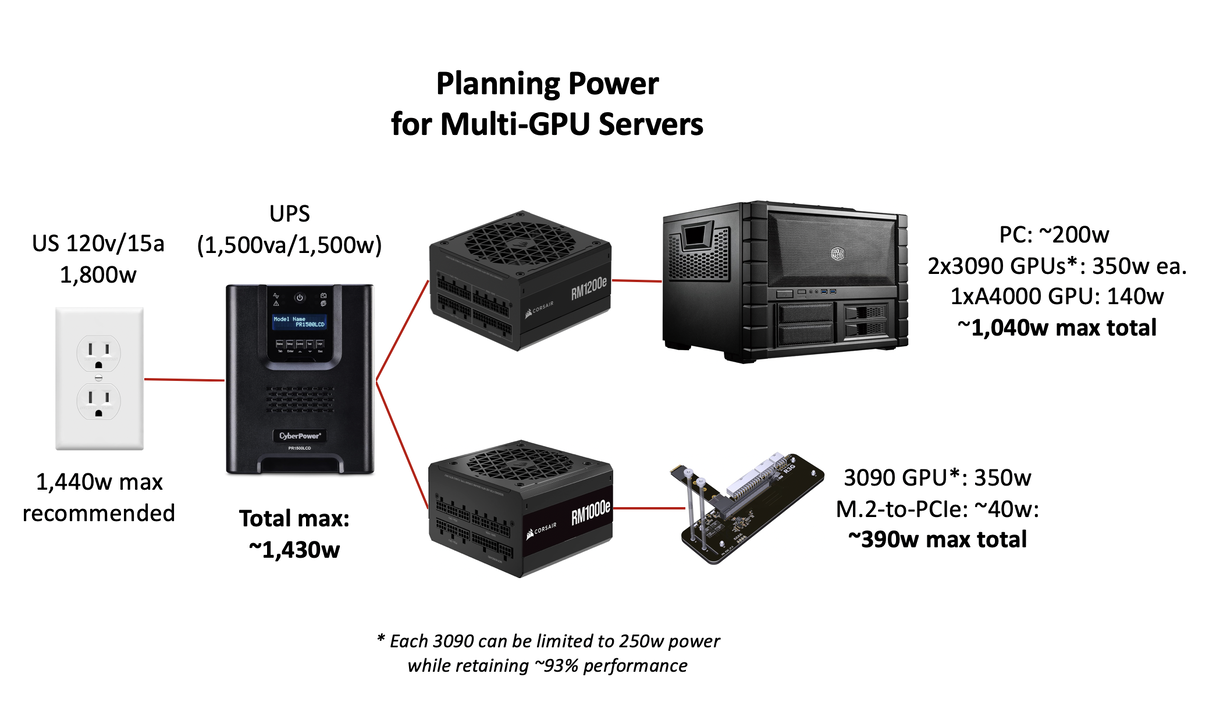

TL;DR: Make sure every part of the power chain (main supply, circuit, outlet, UPSs, and power supplies) can handle maximum loads and power spikes. Consider reducing CPU core count and the number of memory modules to free up power for GPUs. GPUs can be power-limited or undervolted without a drastic impact on performance.

Verify the capacity of your main supply, circuit breaker, and outlet. In the US, rooms or zones often share a single 120 V/15 A circuit rated for 1,800 watts, with a suggested maximum continuous utilization of 80% (1,440 watts). A 20 A circuit can be installed to provide up to 2,400 watts (1,920 watts at 80%).
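The circuit arithmetic is simple enough to sketch. The Python snippet below is a minimal illustration, assuming a nominal 120 V supply and the 80% continuous-load guideline mentioned above; the function name `circuit_capacity_watts` is hypothetical, and your local electrical code takes precedence.

```python
def circuit_capacity_watts(volts: float, amps: float, utilization: float = 0.8) -> tuple[float, float]:
    """Return (rated watts, suggested continuous watts) for one circuit.

    Assumes the 80% continuous-load guideline by default; adjust for local code.
    """
    rated = volts * amps
    return rated, rated * utilization


if __name__ == "__main__":
    # Nominal US residential circuits at 120 V.
    for amps in (15, 20):
        rated, continuous = circuit_capacity_watts(120, amps)
        print(f"120 V / {amps} A: {rated:,.0f} W rated, {continuous:,.0f} W continuous")
```

Keep in mind that everything else sharing the circuit (monitors, lighting, other devices in the room) draws from the same budget.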

Use a power supply calculator that accounts for all components (motherboard, CPU, memory, storage, GPUs, other peripherals, case fans, and coolers).
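At its core, a power supply calculator sums the draw of every component. The sketch below shows that idea in Python. Apart from the 350 W RTX 3090 figure used in this post, every wattage is a hypothetical placeholder, so substitute the manufacturer's specifications for your own parts. The `Component` class and `total_load_w` function are illustrative names, not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    watts: float  # estimated maximum draw per unit (hypothetical unless noted)
    qty: int = 1


def total_load_w(parts: list[Component]) -> float:
    """Sum the estimated maximum draw of every component in the build."""
    return sum(p.watts * p.qty for p in parts)


# Illustrative two-GPU build; only the 350 W GPU figure comes from the text.
BUILD = [
    Component("Motherboard", 60),
    Component("CPU", 170),
    Component("Memory module", 5, qty=4),
    Component("NVMe SSD", 8, qty=2),
    Component("GPU (RTX 3090, stock limit)", 350, qty=2),
    Component("Case fan", 3, qty=6),
    Component("CPU cooler pump", 10),
]

if __name__ == "__main__":
    # List the largest consumers first so it is obvious where the power goes.
    for p in sorted(BUILD, key=lambda c: c.watts * c.qty, reverse=True):
        print(f"{p.name:<30} {p.watts * p.qty:>6.0f} W")
    print(f"{'Total':<30} {total_load_w(BUILD):>6.0f} W")
```

In this example the two GPUs account for roughly 70% of the estimate (700 of 994 W), which is why GPU-side adjustments tend to pay off the most.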

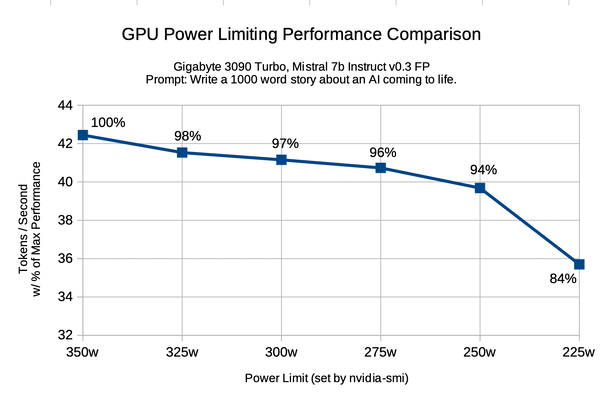

You can lower the power limit of NVIDIA GPUs with the nvidia-smi command-line tool without greatly impacting performance. For example, a 350 W RTX 3090 can be limited to 250 W while retaining roughly 94% of its performance. Depending on the GPU model and operating system, it is also possible to undervolt GPUs; this requires more stability testing but can retain even more performance at lower power.
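As a sketch of the power-limiting workflow, the Python helpers below build the relevant nvidia-smi commands and compute the efficiency gain implied by the 3090 figures above. The `-i` (GPU index) and `-pl` (power limit) options and the `--query-gpu` fields are standard nvidia-smi options. The helper names are hypothetical. Setting a limit normally requires root or administrator rights, the value must fall between the card's `power.min_limit` and `power.max_limit`, and the limit typically has to be reapplied after a reboot.

```python
import shlex
import subprocess

QUERY_FIELDS = "index,name,power.draw,power.limit,power.min_limit,power.max_limit"


def query_power_cmd() -> list[str]:
    """nvidia-smi command that reports current draw and allowed limits per GPU."""
    return ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv"]


def power_limit_cmd(gpu_index: int, watts: int) -> list[str]:
    """nvidia-smi command that caps one GPU's board power limit (in watts)."""
    if watts <= 0:
        raise ValueError("power limit must be positive")
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def apply(cmd: list[str]) -> None:
    """Run a command; setting limits needs root/administrator privileges."""
    subprocess.run(cmd, check=True)


def perf_per_watt_gain(stock_w: float, limited_w: float, perf_retained: float) -> float:
    """Relative perf-per-watt improvement from power limiting (0.30 means 30%)."""
    return perf_retained / (limited_w / stock_w) - 1


if __name__ == "__main__":
    # Print the commands instead of running them; pass one to apply() to execute it.
    print(shlex.join(query_power_cmd()))
    print(shlex.join(power_limit_cmd(0, 250)))
    # 350 W -> 250 W while keeping ~94% performance, per the figures above.
    print(f"Perf/W gain: {perf_per_watt_gain(350, 250, 0.94):.0%}")
```

With these numbers the card does about 32% more work per watt (0.94 / (250/350) ≈ 1.32). On a shared 15 A circuit, that is what makes power limiting attractive.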

To be safe, your power supply (or supplies) should be able to handle the maximum load of all components, with additional headroom for power spikes. If you use one or more uninterruptible power supplies (UPSs), make sure they can handle the maximum load of all connected equipment. Also factor in the power requirements of your CPU and memory configuration.
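The headroom and UPS checks can be sketched the same way. The Python below is a minimal illustration. The 30% headroom factor, the 90% PSU efficiency, and the 1500 VA / 900 W UPS are hypothetical example values, not recommendations from this post. Two details matter here. First, a UPS sees wall (AC) draw, which is higher than the DC load because of PSU losses. Second, UPSs carry separate VA and watt ratings, so compare against the watt rating.

```python
import math


def recommended_psu_watts(max_dc_load_w: float, headroom: float = 0.3, step_w: int = 50) -> int:
    """Max DC load plus a headroom fraction, rounded up to the next step_w watts.

    The 30% default is an illustrative assumption, not a standard.
    """
    return math.ceil(max_dc_load_w * (1 + headroom) / step_w) * step_w


def wall_draw_w(dc_load_w: float, efficiency: float = 0.9) -> float:
    """AC power pulled from the outlet/UPS for a given DC load (assumed efficiency)."""
    return dc_load_w / efficiency


def ups_can_carry(ups_rated_w: float, wall_loads_w: list[float]) -> bool:
    """True if the UPS watt rating covers the summed max wall draw of its loads."""
    return sum(wall_loads_w) <= ups_rated_w


if __name__ == "__main__":
    max_dc = 1100  # hypothetical max DC load of the workstation
    print(f"Suggested PSU: {recommended_psu_watts(max_dc)} W")
    loads = [wall_draw_w(max_dc), 30]  # workstation plus a hypothetical 30 W monitor
    print(f"Wall draw: {sum(loads):.0f} W")
    print("1500 VA / 900 W UPS OK?", ups_can_carry(900, loads))
```

In this example the UPS would be overloaded even though its VA rating looks generous. A larger UPS or GPU power limits would be needed.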

Inference is not heavily dependent on the CPU or system memory, so you may be able to save as much as 100 W by choosing a CPU with fewer cores or installing fewer memory modules.

While it's possible to use an external power supply to power internal GPUs connected directly to the motherboard's PCIe bus, keep the following in mind:

- Proper grounding is essential to prevent equipment damage and electrical hazards.

- Both power supplies must be grounded consistently to avoid differences in electrical potential.

- Power-up and power-down sequences must be followed correctly to prevent component damage.

Conclusion

(by Gyula Rabai) Building a small-scale AI workstation or server for home, educational, or small office use requires careful attention to power management to ensure efficiency, safety, and performance. By verifying the capacity of your main supply, circuit breakers, and outlets, and by using tools such as power supply calculators, you can build a system tailored to your needs while staying within safe power limits.

Strategic choices (reducing CPU core counts, limiting memory modules, or power-limiting and undervolting GPUs) offer practical ways to optimize power allocation, particularly for GPU-intensive tasks such as AI inference. Additionally, ensuring proper grounding and power sequencing when using external power supplies can protect your equipment from damage.

By following these best practices suggested by Dave, you can build a reliable and cost-effective AI system that balances performance with power efficiency, making it well-suited for residential or small lab environments.