PCI Express Considerations for Multi-GPU Builds

Written by:

Dave Ziegler

President at Ziegler Technical Solutions LLC

Welcome back to my DIY AI series, where we explore considerations and best practices for building small-scale home, educational, and small office AI systems. In this second post, we'll cover PCIe as it relates to selecting motherboards, CPUs, and GPUs when building small AI workstations for hobby, education, and small office use.

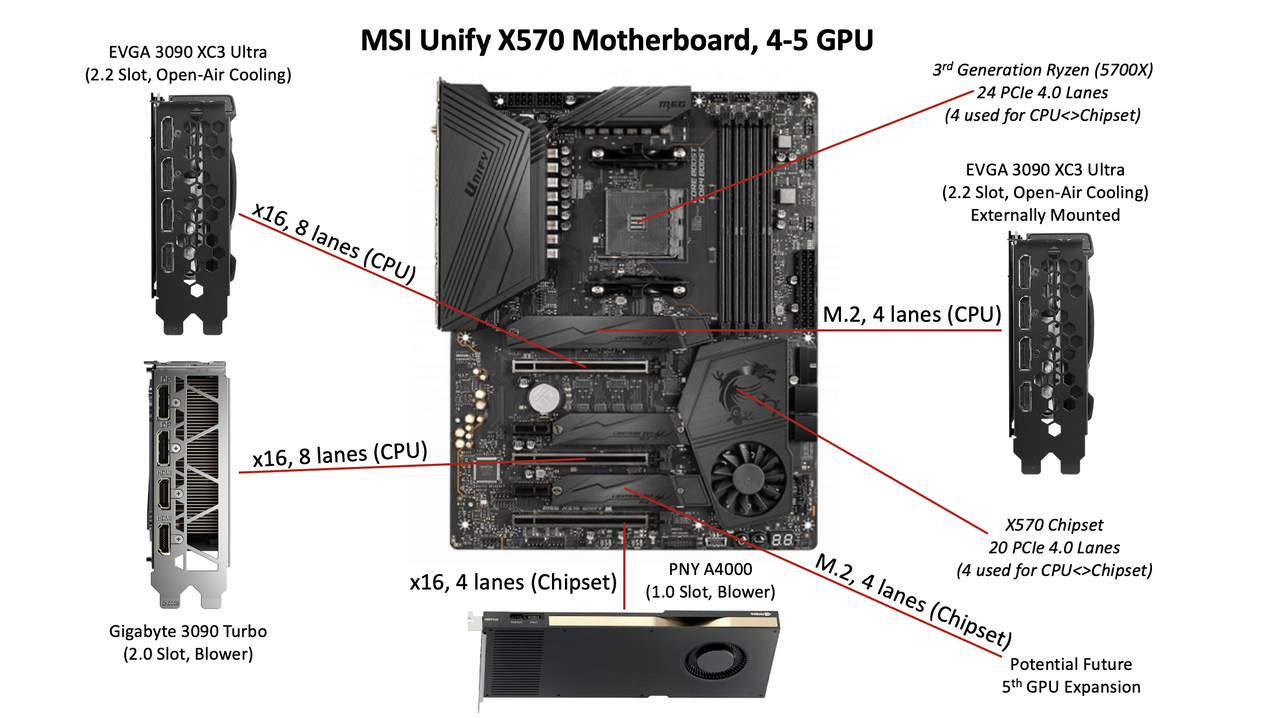

TL;DR: Don’t choose your motherboard or CPU based on the number of physical slots. Not all physical PCIe x16 slots are the same! GPU throughput is dependent on both the PCIe generation and number of lanes allocated to a PCIe slot, which are in turn dependent on CPU, chipset, and motherboard design.

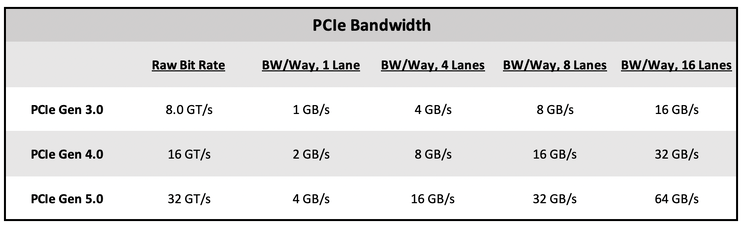

There are currently six generations of PCIe (1.0, 2.0, 3.0, 4.0, 5.0, 6.0), with each generation roughly doubling the per-lane bandwidth of the one before it (Figure 2).

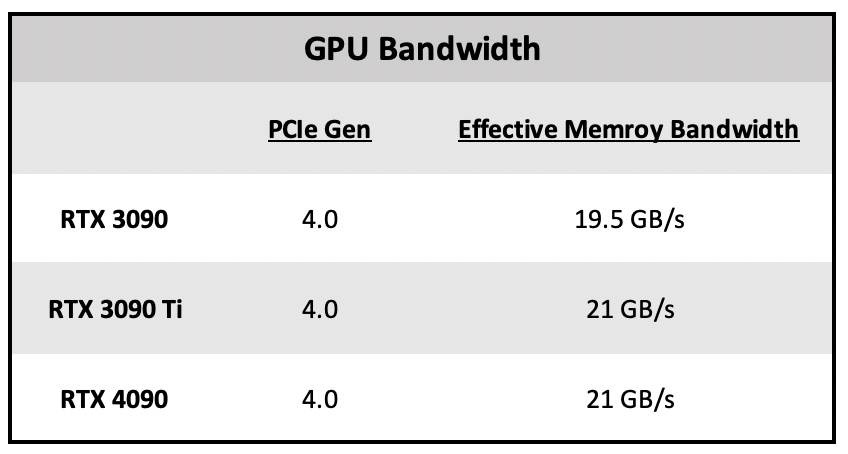

The PCIe generation(s) supported by a system depend on the CPU, chipset, and motherboard. Different physical PCIe slots on a motherboard might be of different generations depending on whether they are connected to PCIe interfaces on the CPU or on the chipset. Most modern GPUs at the time of this writing, including all NVIDIA Ampere and Ada series cards commonly used for LLM applications, use PCIe 4.0.

Note: At the time of this writing, there are no PCIe 5.0 GPUs despite early press releases claiming NVIDIA’s 4000 series and AMD’s 7000 series would be PCIe 5.0. However, NVIDIA’s 5000 series and newer workstation GPUs are expected to be PCIe 5.0.

Newer GPUs are backwards compatible with older PCIe generations but will be limited to the speeds of the older generation. So, if you only have PCIe 3.0 slots on your motherboard, you can still use a more modern GPU at reduced throughput. Newer motherboards support older GPUs (older PCIe generations), but these will be limited to their generation’s throughput and won’t benefit from the newer generation’s increased bandwidth. GPU throughput is dependent on both the PCIe generation and number of lanes allocated to a PCIe slot (see below for some examples and compare with PCIe Bandwidth Chart above).

A physical x16 slot does not necessarily provide all 16 lanes or maximum throughput. In many cases, the number of lanes may be reduced, or slots may be disabled, if multiple slots or other on-board peripherals are also in use.

While GPUs generally require a physical x16 slot, they will operate with 16, 8, 4, or even just 1 lane. The throughput will be limited by the PCIe generation and number of lanes available to the GPU.
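To make the relationship between generation, lane count, and throughput concrete, here is a minimal Python sketch. It assumes the commonly published per-lane transfer rates and line encodings for PCIe 1.0 through 5.0; PCIe 6.0 is left out because it uses a different signaling and encoding scheme. The function and table names are my own, and the results are theoretical one-direction maximums, so real-world throughput will be lower.

```python
# Per-lane signalling rate (GT/s) and line-encoding efficiency per generation.
# PCIe 1.0/2.0 use 8b/10b encoding; 3.0 through 5.0 use 128b/130b.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}

VALID_LANE_COUNTS = (1, 2, 4, 8, 16)


def theoretical_gb_per_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    if generation not in PCIE_GENERATIONS:
        raise ValueError(f"unsupported PCIe generation: {generation}")
    if lanes not in VALID_LANE_COUNTS:
        raise ValueError(f"unsupported lane count: {lanes}")
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return gt_per_s * efficiency * lanes / 8


if __name__ == "__main__":
    # Print a small generation-by-lanes table for comparison with the chart.
    for generation in PCIE_GENERATIONS:
        row = "  ".join(
            f"x{lanes}: {theoretical_gb_per_s(generation, lanes):6.2f}"
            for lanes in VALID_LANE_COUNTS
        )
        print(f"PCIe {generation}.0  {row}")
```

One thing the numbers make obvious: a PCIe 4.0 GPU running at x8 has the same theoretical bandwidth as a PCIe 3.0 GPU running at x16, so halving the lanes costs you about one generation.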

Note: For LLM inference, where PCIe throughput is not the bottleneck, using fewer lanes (and therefore lower throughput) will mostly affect model loading times. For training, where there is a lot of communication between multiple GPUs, operating at fewer than 8 lanes will greatly increase training time.
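As a rough illustration of the loading-time point, the sketch below estimates how long it takes to push a given amount of data across a PCIe link. It assumes the link is the only bottleneck, which is optimistic since storage is often slower. The function name, the `efficiency` parameter, and the 24 GB model size are hypothetical values for illustration; the link speeds are approximate theoretical PCIe 4.0 figures.

```python
def estimate_transfer_seconds(size_gb: float, link_gb_per_s: float,
                              efficiency: float = 1.0) -> float:
    """Estimate seconds needed to move size_gb over a link.

    efficiency is an assumed fraction (0 to 1] of the theoretical
    bandwidth that is actually achieved; it is a guess, not a measurement.
    """
    if size_gb < 0:
        raise ValueError("size_gb must not be negative")
    if link_gb_per_s <= 0:
        raise ValueError("link_gb_per_s must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return size_gb / (link_gb_per_s * efficiency)


if __name__ == "__main__":
    model_gb = 24.0  # hypothetical size of the weights being loaded
    # Approximate theoretical PCIe 4.0 bandwidth in GB/s for each lane count.
    pcie4_links = {"x16": 31.5, "x8": 15.75, "x4": 7.9, "x1": 2.0}
    for name, bandwidth in pcie4_links.items():
        seconds = estimate_transfer_seconds(model_gb, bandwidth)
        print(f"PCIe 4.0 {name}: about {seconds:.1f} s")
```

Even in the worst case here, the cost is paid once per model load, which is why fewer lanes are usually tolerable for inference.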

The total number of lanes, allocation of lanes to PCIe slots and on-board devices, and PCIe generation of each slot will depend on the CPU and chipset, both of which provide PCIe lanes. It is important to check the motherboard’s manual and BIOS settings to verify what slots are active in any configuration, and how many lanes are available to each slot.
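Once a system is built, it is worth confirming what the GPU actually negotiated. On Linux, the kernel exposes this per device under `/sys/bus/pci/devices`, and the sketch below reads it. This is a Linux-only example under my own assumptions: the helper names are mine, it treats any device whose class code starts with `0x03` as a display controller, and the "maximum" it compares against is what the device reports, not what the slot is wired for. Also note that many GPUs drop their link speed at idle to save power, so check under load before concluding a slot is limited.

```python
from pathlib import Path
from typing import Dict, List, Optional

SYSFS_PCI_DEVICES = Path("/sys/bus/pci/devices")


def parse_link_speed(text: str) -> float:
    """Extract the GT/s figure from strings like '16.0 GT/s PCIe' or '8 GT/s'."""
    return float(text.split()[0])


def read_link_status(device_dir: Path) -> Optional[Dict[str, float]]:
    """Return current and maximum link speed/width, or None if unavailable."""
    status: Dict[str, float] = {}
    try:
        for name in ("current_link_speed", "max_link_speed"):
            status[name] = parse_link_speed((device_dir / name).read_text())
        for name in ("current_link_width", "max_link_width"):
            status[name] = int((device_dir / name).read_text().strip())
    except (OSError, ValueError, IndexError):
        # Missing files (e.g. non-PCIe devices) or unparseable contents.
        return None
    return status


def display_devices(root: Path = SYSFS_PCI_DEVICES) -> List[Path]:
    """List PCI devices whose class code starts with 0x03 (display controllers)."""
    found: List[Path] = []
    if not root.is_dir():
        return found  # not Linux, or sysfs is not mounted
    for device_dir in sorted(root.iterdir()):
        try:
            pci_class = (device_dir / "class").read_text().strip()
        except OSError:
            continue
        if pci_class.startswith("0x03"):
            found.append(device_dir)
    return found


def is_below_maximum(status: Dict[str, float]) -> bool:
    """True if the link runs slower or narrower than the device's own maximum."""
    return (status["current_link_speed"] < status["max_link_speed"]
            or status["current_link_width"] < status["max_link_width"])


if __name__ == "__main__":
    for device_dir in display_devices():
        status = read_link_status(device_dir)
        if status is None:
            print(f"{device_dir.name}: link information unavailable")
            continue
        note = " (below device maximum)" if is_below_maximum(status) else ""
        print(f"{device_dir.name}: {status['current_link_speed']} GT/s "
              f"x{status['current_link_width']}{note}")
```

If a GPU reports x8 or x4 under load when you expected x16, that is your cue to go back to the motherboard manual and BIOS settings.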

Slot spacing is also a key factor, particularly for multiple GPUs. Make sure your configuration can accommodate your GPUs (some of which can be up to 4 slots wide), leaving enough room for cooling and airflow.

If a motherboard has an available M.2 slot, it can be used (at a max of 4 lanes) to connect an external GPU using a direct M.2-to-PCIe adapter, or an M.2-to-OCuLink adapter and an OCuLink external PCIe mount. OCuLink (Optical Copper Link) is a different physical connector for PCIe signals that provides a way to extend PCIe connections over a cable. These solutions require a separate power supply for the external GPU(s).

If a motherboard supports Thunderbolt, there are numerous external chassis that can be used for GPUs. It’s important to ensure the power supply in the chassis can handle the GPU.

Don’t forget to make sure your power supply is adequate and has enough GPU power connections (either PCIe 6+2 or 600W 12VHPWR). For modular power supplies, you may need to order additional cables. Adapter cables from 12VHPWR to dual PCIe 6+2 can also be purchased if necessary.
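A quick back-of-the-envelope check for the power supply can be sketched as follows. Everything here is an assumption for illustration: the function names, the 20% default headroom, and the wattages in the example are hypothetical, so substitute the rated power figures from your own GPU, CPU, and component spec sheets.

```python
from typing import Sequence


def required_psu_watts(gpu_watts: Sequence[float], other_watts: float,
                       headroom: float = 0.2) -> float:
    """Sum component power draw and add a safety margin.

    headroom is an assumed fractional margin (0.2 means 20% extra),
    not a manufacturer recommendation.
    """
    if headroom < 0:
        raise ValueError("headroom must not be negative")
    return (sum(gpu_watts) + other_watts) * (1 + headroom)


def psu_is_adequate(psu_watts: float, gpu_watts: Sequence[float],
                    other_watts: float, headroom: float = 0.2) -> bool:
    """True if the PSU rating covers the estimated draw plus headroom."""
    return psu_watts >= required_psu_watts(gpu_watts, other_watts, headroom)


if __name__ == "__main__":
    gpus = [300.0, 300.0]   # hypothetical rated power of two GPUs, in watts
    rest_of_system = 200.0  # hypothetical CPU, drives, fans, and so on
    needed = required_psu_watts(gpus, rest_of_system)
    print(f"Estimated PSU requirement: {needed:.0f} W")
    print("850 W PSU adequate:", psu_is_adequate(850.0, gpus, rest_of_system))
```

This only covers total wattage. You still need to count the physical GPU power connectors separately.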

Conclusion

(by Gyula Rabai) Building a multi-GPU system for AI workloads requires careful consideration of PCIe specifications to ensure optimal performance and compatibility. As Dave has explained, selecting the right motherboard, CPU, and GPUs goes beyond simply counting physical PCIe x16 slots. The PCIe generation, the number of lanes allocated to each slot, and the overall design of the CPU and chipset play critical roles in determining GPU throughput, a key factor in both inference and training tasks. For hobbyists, educators, or small office users building small-scale AI workstations, overlooking these details can lead to bottlenecks, whether it’s slower model loading times with fewer lanes or extended training durations in multi-GPU setups.

Beyond bandwidth, practical aspects like slot spacing, cooling, and power supply capacity are equally vital. GPUs can be power-hungry and physically large, so ensuring adequate airflow and sufficient wattage—along with the right power connectors—is non-negotiable. For those needing flexibility, options like M.2-to-PCIe adapters or Thunderbolt external chassis provide creative ways to expand GPU capacity, albeit with their own trade-offs and requirements.

Ultimately, the key to a successful multi-GPU build lies in thorough research and planning. Always consult your motherboard’s manual and BIOS settings to confirm lane allocations and slot functionality in your chosen configuration. It is also wise to do some research online, or you can ask me (Gyula) or Dave for advice. By aligning your hardware choices with your specific AI goals, whether that is LLM inference or training, you can maximize performance without overspending or overcomplicating your setup. With the right approach, your DIY AI workstation can deliver the power and efficiency needed to bring your projects to life. Stay tuned for more insights in this series as we continue to demystify the art of building AI systems at home or in your office!